
A new robot from MIT has learned to recognize completely unfamiliar objects

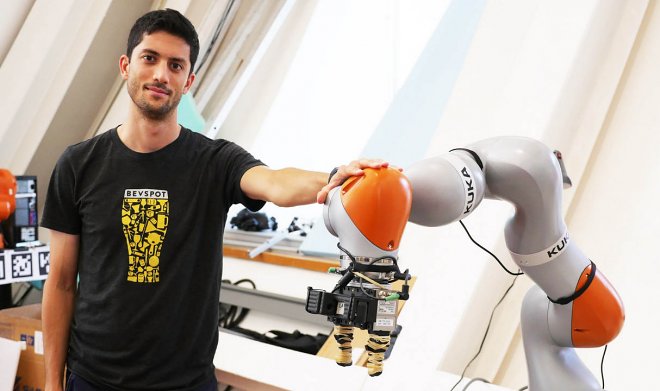

Researchers from MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) have published a description of a new computer vision technique. They will present the details at the Conference on Robot Learning in Zurich in October, but it is already clear that this is a breakthrough: the robot does not need to be trained to recognize objects in the classical way.

The scientists abandoned the idea of preloading the robot's memory with data about the objects it will later try to "see" in the world. Instead, the machine abstracts away from its surroundings entirely: it perceives everything around it as three-dimensional models made of interrelated points. The robot scans an object, builds a model of it, stores the data in memory, and assigns it a label. It does not care what the object actually is; what matters is that the object has been inspected and can be found again.

The key point is that the robot does not work with an image of the object but with its mathematical model, which it can split into parts, adjust, and view from different angles. As a result, it can "see" the same object even when it is turned around, partially hidden, repainted, or broken. The robot still does not know the objects' properties and does not understand what to do with them or how to use this information, but at this stage the work concerns only the vision system, not a new robotic platform.
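The article does not describe CSAIL's actual algorithm. The Python snippet below is a purely illustrative sketch of the idea in the two paragraphs above: store each scanned object as a labelled 3D point set, then find it again regardless of pose or partial occlusion. It swaps in a simple, well-known technique, a D2 shape distribution (a histogram of pairwise point distances), which does not change when an object is rotated or moved. The names `d2_descriptor`, `ObjectMemory`, and `rotate_and_shift`, the 16-bin histogram, and the toy cube and rod shapes are hypothetical choices for this example, not part of the MIT system.

```python
import math
from itertools import combinations
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]


def d2_descriptor(points: Sequence[Point], bins: int = 16) -> List[float]:
    """Hypothetical shape signature (a D2 shape distribution): the normalized
    histogram of all pairwise point distances. Distances do not change under
    rotation or translation, and dividing by the largest one removes scale."""
    if len(points) < 2:
        raise ValueError("need at least two points")
    dists = [math.dist(a, b) for a, b in combinations(points, 2)]
    max_d = max(dists)
    if max_d == 0:
        raise ValueError("all points coincide")
    hist = [0] * bins
    for d in dists:
        # Map d into [0, bins); the largest distance lands in the last bin.
        hist[min(int(d / max_d * bins), bins - 1)] += 1
    return [count / len(dists) for count in hist]


class ObjectMemory:
    """Hypothetical store: one descriptor per arbitrary label. The label
    carries no meaning; the robot only needs to find the object again."""

    def __init__(self, bins: int = 16) -> None:
        self.bins = bins
        self.models: Dict[str, List[float]] = {}

    def memorize(self, label: str, points: Sequence[Point]) -> None:
        # "Scan" an object once and file its signature under a label.
        self.models[label] = d2_descriptor(points, self.bins)

    def recognize(self, points: Sequence[Point]) -> str:
        # Return the stored label whose signature is closest (L1 distance).
        if not self.models:
            raise LookupError("no objects memorized yet")
        query = d2_descriptor(points, self.bins)
        return min(
            self.models,
            key=lambda label: sum(abs(q - m) for q, m in zip(query, self.models[label])),
        )


def rotate_and_shift(points: Sequence[Point], angle: float, shift: Point) -> List[Point]:
    """Rotate points about the z axis by `angle` radians, then translate them."""
    c, s = math.cos(angle), math.sin(angle)
    return [
        (c * x - s * y + shift[0], s * x + c * y + shift[1], z + shift[2])
        for x, y, z in points
    ]


if __name__ == "__main__":
    cube = [(x, y, z) for x in (0, 1) for y in (0, 1) for z in (0, 1)]
    rod = [(i, 0, 0) for i in range(8)]

    memory = ObjectMemory()
    memory.memorize("object-1", cube)
    memory.memorize("object-2", rod)

    # The cube seen from another angle and position, with one corner hidden.
    seen = rotate_and_shift(cube, math.radians(40), (5.0, -2.0, 1.0))[1:]
    print(memory.recognize(seen))  # object-1
```

Running the script prints `object-1`: the rotated, shifted cube with one corner hidden still matches the stored cube rather than the rod. A histogram of distances is a deliberately crude stand-in. It collapses many different shapes onto similar signatures and degrades under heavy occlusion, so a real system would need far richer models. The sketch only shows that recognition can work on a stored 3D model rather than on a remembered image.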