NEWS

Google has learned to realistically transfer a person into a virtual environment

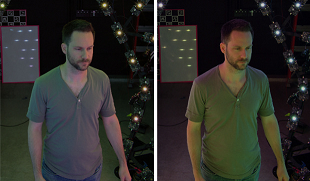

Google engineers have developed a way to create a realistic model of a moving person and place it in a virtual scene, relighting the model to match its surroundings. They built a capture stage with several dozen cameras and hundreds of controllable light sources, inside which the person stands.

The system rapidly switches between lighting conditions while filming the person from all sides. It then combines this data into a model that accurately describes both the shape of the body and clothing and their optical properties. The paper will be presented at SIGGRAPH Asia 2019.

Motion capture and virtual avatar technologies have been used for several years in a variety of fields. Some of these systems (for example, those used in filmmaking) capture only the movements of the face and body, and the recordings are then used to animate a different character. Where the person's own appearance must be preserved, multi-camera systems are used instead.

According to nanonewsnet.ru, some developers in this area have achieved fairly high-quality results. For example, Intel has installed a system at some sports stadiums that lets viewers watch replays from any angle. However, such systems cannot capture the optical properties of objects, so the models they produce cannot be realistically placed in an environment with different lighting.

A team of Google engineers led by Paul Debevec and Shahram Izadi built a capture stage and software that produce a model faithfully reproducing both the shape and the optical properties of a person in motion. The model can then be transferred to another environment, with its lighting adjusted to match.

© All rights reserved. When using this news item, citing www.ict.az is required.